From an email I sent today:

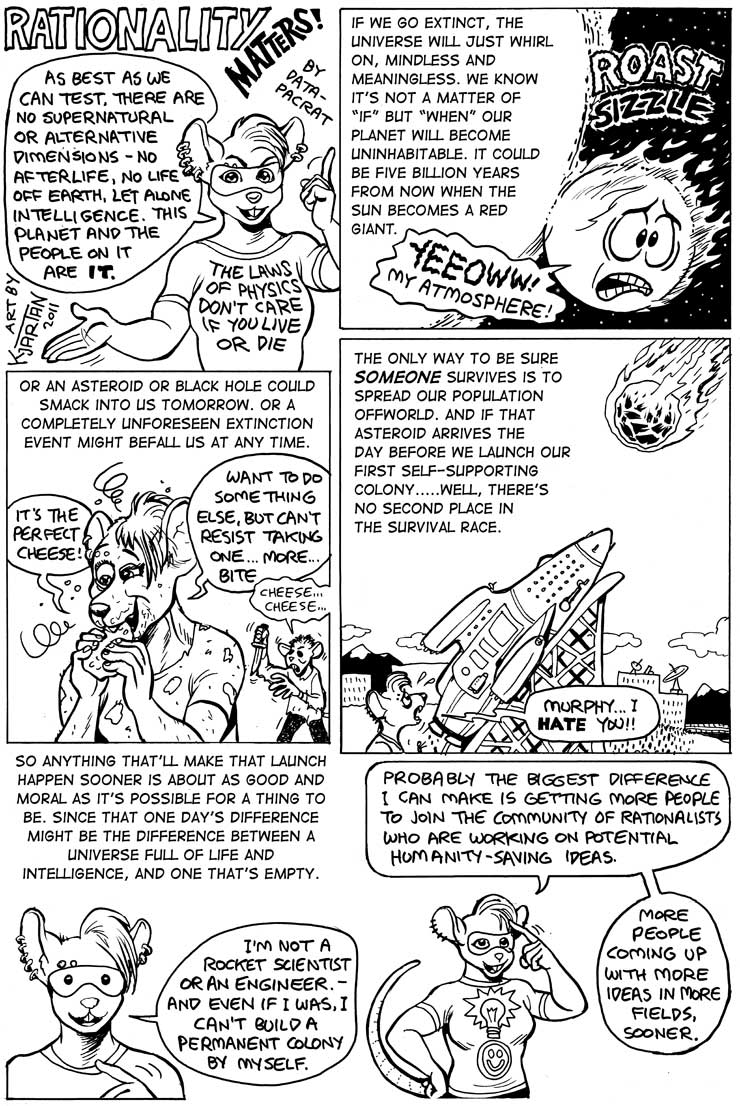

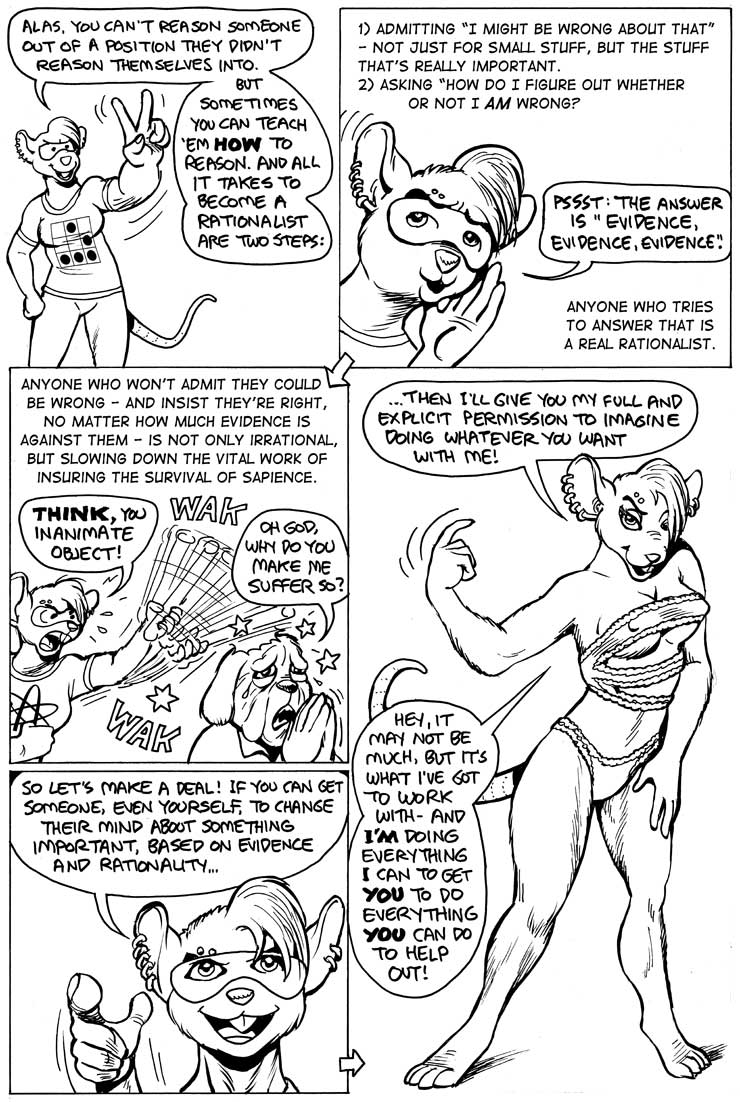

I’ve found a few meta-rules to be handy in working out the rules of thumb. Aiming for the overall goal of avoiding the extinction of sapience in the universe could be considered one. Another is that it’s generally infeasible for there to be one rule that applies to me and another rule that applies to everyone else. Yet another is this: if there’s a way for one person to end up worse off than the other as the result of an interaction, it’s safe to assume that I’m more likely than not to end up on the worse side of things (i.e., my ‘not as smart as I like to think’ quote). So it’s worthwhile to try to ensure that the worst that can happen is as good as possible. (This latter meta-rule has, in the present day, tended to nudge me in the direction of favouring labour over mercantilist oligarchs, though I haven’t signed up with the IWW quite yet.)
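The last meta-rule is close to what decision theorists call a maximin rule: score each option by its worst possible outcome, then pick the option whose worst case is best. Here is a minimal sketch, assuming a few hypothetical social arrangements with made-up payoffs for me depending on which side of an interaction I land on. The arrangement names and numbers are illustrative only.

```python
from typing import Dict, List


def maximin(options: Dict[str, List[float]]) -> str:
    """Return the option whose worst-case outcome is highest."""
    # Each option's worst case is its minimum payoff; choose the option
    # that maximizes that minimum (ties go to the first one listed).
    return max(options, key=lambda name: min(options[name]))


# Hypothetical payoffs: [if I end up on the better side, if I end up on the worse side].
arrangements = {
    "winner_takes_all": [100.0, 0.0],
    "modest_safety_net": [80.0, 10.0],
    "strong_safety_net": [60.0, 30.0],
}

print(maximin(arrangements))  # strong_safety_net
```

Maximin deliberately ignores how likely each outcome is. That is why it pairs naturally with the pessimistic assumption that I’ll probably be the one who ends up worse off.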

…

As of a couple of years ago, one of the meta-rules I relied on most heavily was “I’m a selfish bastard, but I’m a /smart/ selfish bastard interested in my /long-term/ self-interest.” (This meta-rule has since become, at best, secondary to preventing sapience’s extinction, if for no other reason than that if all sapience died out, so would I.) This resulted in what I called the “Trader’s Definition” of personhood, and of who should be granted whatever rights are inherent in personhood. If some entity can choose whether or not to exchange a banana for a backrub, or some programming for some playtime, then it’s in my own self-interest to have everyone treat that entity /as if/ it were a person. Whether or not it meets any particular philosophical definition of self-awareness, the mechanisms that underlie the efficiencies of a competitive marketplace don’t seem to care much whether any particular economic agent has any particular level of consciousness. This idea also satisfies some of the other meta-rules, as it applies equally to me and to anyone else. And if some enhanced transhuman thinks only transhumans should have rights, the principle of comparative advantage means that even if such a transhuman is better than me at everything, I can still contribute to the overall economy. So I should still receive enough rights to participate in that economy.
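The comparative-advantage claim is easy to check with a little arithmetic. Here is a minimal sketch, assuming a hypothetical transhuman who out-produces me at both programming and growing bananas, with invented hourly rates. The `Producer` type and all the numbers are illustrative assumptions. The point is that being better at everything (absolute advantage) differs from being cheaper at something in terms of what you give up (comparative advantage).

```python
from dataclasses import dataclass
from typing import Tuple

Output = Tuple[float, float]  # (units of programming, bananas)


@dataclass
class Producer:
    name: str
    programming_per_hour: float
    bananas_per_hour: float

    def opportunity_cost_of_banana(self) -> float:
        # Units of programming given up for each extra banana produced.
        return self.programming_per_hour / self.bananas_per_hour

    def output(self, hours_programming: float, hours_bananas: float) -> Output:
        return (self.programming_per_hour * hours_programming,
                self.bananas_per_hour * hours_bananas)


def total(*outputs: Output) -> Output:
    # Sum each good across all producers.
    return (sum(o[0] for o in outputs), sum(o[1] for o in outputs))


# Hypothetical productivities: the transhuman is better at BOTH tasks...
transhuman = Producer("transhuman", programming_per_hour=10.0, bananas_per_hour=10.0)
me = Producer("me", programming_per_hour=1.0, bananas_per_hour=4.0)

# ...but a banana costs me less forgone programming than it costs the transhuman.
print(me.opportunity_cost_of_banana(), transhuman.opportunity_cost_of_banana())  # 0.25 1.0

# Ten working hours each.
no_trade = total(transhuman.output(5, 5), me.output(5, 5))      # each splits time evenly
specialized = total(transhuman.output(6, 4), me.output(0, 10))  # I specialize in bananas
print(no_trade)     # (55.0, 70.0)
print(specialized)  # (60.0, 80.0)
```

With specialization there is more of both goods to go around. So some terms of trade leave both the transhuman and me better off than working alone.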

This definition also extends reasonably simply to children and to adults with disabilities. The former still have the potential to become economically functioning adults, so it’s worthwhile to ensure they have enough rights to maximize the chances that they will do so. (Even fetuses can be considered under these criteria, though the mother’s rights also have to be considered, and can easily outweigh the rights of the fetus.) As for the latter, disability is a situation any individual has a chance of ending up in. So to ensure your own future is as comfortable as possible, it’s reasonable to grant such people enough rights to allow a life with as much dignity as possible. Animals, in general, are unable to make such choices, and humans don’t turn into them, so there’s no real impetus to give them any rights. (However, humans do have mirror neurons that allow them to subjectively feel what they think other beings, including animals, are feeling. So there does exist an impetus to reduce animal suffering in order to reduce human suffering. But that’s not quite the same thing, and there are plenty of other forms of human suffering which are a higher priority.)

With a bit of squinting, a number of potential technologies can be viewed through this lens as well. A classic SF trope is to turn ordinary animals into uplifts to serve as a new servant class. But if it really does become possible to upgrade a dog into something which can do a human-level job, such a creature would almost by definition be able to do all manner of human-level tasks. That makes it worthwhile to let uplifts figure out which job gives them the greatest comparative advantage. That frees humans to work at whatever gives /them/ the greatest comparative advantage, so that everyone’s life ends up better all around.

I’ve recently read a book which has given me a further perspective on all of the above: “The Dictator’s Handbook: Why Bad Behavior is Almost Always Good Politics”, by Bruce Bueno de Mesquita and Alastair Smith. Their thesis is that all political organizations can be sorted by how many people their leaders depend on to stay in power. One of their conclusions is that once leaders require the support of a significant part of the population, their goals shift. Instead of satisfying their inner circle, essentially by bribing them, they work to satisfy the larger public by implementing public policy that benefits that wider circle. A further conclusion is that even in a nominally democratic system, the particular details of the election process may mean that the [potential] leaders only have to pay attention to a surprisingly small part of the overall electorate. So to induce the [potential] leaders to create policies that benefit as much of the public as possible, it’s worthwhile to work on certain forms of electoral reform. Lessig’s “Rootstrikers” project seems to be one of the best available groups working on this idea.

To put this in more concrete terms, and relate it to the above discussion of rights: suppose a first-past-the-post electoral system is in place, and certain districts are almost certain to vote one way or another. Then campaigning politicians have very little incentive to offer those districts any benefits, compared to the benefits they offer swing districts. And even within those swing districts, there are various blocs to court, some of which may already be committed to one party or another. The leaders’ incentive is to focus only on improving the lives of those groups who might make a significant difference to their re-election campaign. Those leaders have no particular incentive to give any rights at all to, say, uplifted dogs, unless doing so gives them a competitive advantage. That could happen by improving the lives of the swing voters they’re focusing on. It could also happen because giving said uplifts the right to vote seems likely to win their own campaign more votes than it wins their opponent. The main benefit gained by giving rights to uplifts is the overall improvement of the economy. That is an extremely broad-based and generalized improvement, and it doesn’t do very much to improve the lives of, say, the porn-farmers’ lobby. So the approach that seems to maximize the odds of uplifts receiving such rights is to maximize the number of people any particular politician has to court in order to win an election, such as by working to cut down on gerrymandering.
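The swing-district argument can be made concrete with a toy model. Here is a minimal sketch under several assumptions. The districts are equal-sized and hypothetical, each has an invented forecast vote share for one party, and any district forecast within five points of a 50/50 split counts as “swing”. The `District` type, the 5-point threshold, and both maps are illustrative only, not anything taken from the book.

```python
from typing import List, NamedTuple


class District(NamedTuple):
    name: str
    voters: int
    expected_share_a: float  # hypothetical forecast vote share for party A (0 to 1)


def swing_districts(districts: List[District], margin: float = 0.05) -> List[District]:
    """Districts whose forecast is within `margin` of a 50/50 split."""
    return [d for d in districts if abs(d.expected_share_a - 0.5) <= margin]


def share_of_electorate_courted(districts: List[District], margin: float = 0.05) -> float:
    """Fraction of all voters who live in swing districts."""
    swing_voters = sum(d.voters for d in swing_districts(districts, margin))
    total_voters = sum(d.voters for d in districts)
    return swing_voters / total_voters


# Hypothetical map with mostly safe seats (all numbers invented).
districts = [
    District(f"D{i}", 100_000, s)
    for i, s in enumerate([0.72, 0.68, 0.31, 0.27, 0.52, 0.48, 0.65, 0.35], start=1)
]
# Hypothetical alternative map with fewer safe seats.
redrawn = [
    District(f"R{i}", 100_000, s)
    for i, s in enumerate([0.53, 0.47, 0.51, 0.49, 0.54, 0.46, 0.62, 0.38], start=1)
]

print([d.name for d in swing_districts(districts)])  # ['D5', 'D6']
print(share_of_electorate_courted(districts))        # 0.25
print(share_of_electorate_courted(redrawn))          # 0.75
```

Under these made-up numbers, a candidate focused purely on winning seats needs to court only a quarter of the electorate on the first map, but three quarters on the second. That is the sense in which reducing safe seats widens the set of people whose lives politicians have an incentive to improve.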