Transit of Venus

Transit of Venus, through welder’s glass, at 7:55pm Eastern, at Torosian Park.

Why should I believe that?

Rules-of-thumb are handy: they let you apply a solution you’ve worked out beforehand, without having to spend the time and effort to re-derive it in the heat of the moment. They may not apply in every situation, and they may not give the absolute best answer; but when you have limited time to come up with an answer, they can provide the best answer you could reach in the time available.

I’m currently looking for fairly fundamental rules-of-thumb that could serve as overall ethical guidelines, or even as the axioms of a full ethical system, and preferably ones that pass at least the basic sniff-test of being usable in everyday life. That way, I can compare them with each other and try to work out ahead of time whether any of them would work better than the others, either in specific sorts of situations or in general.

Here are a few examples of what I’m thinking of:

* Pacifism. Violence is bad, so never use violence. In game theory, this would be the ‘always cooperate’ strategy of the Iterated Prisoner’s Dilemma, and it is the simplest strategy that satisfies the criterion of being ‘nice’.

* Zero-Aggression Principle. Do not /initiate/ violence, but if violence is used against you, act violently in self-defense. This is the foundation of many variations of libertarianism. In the IPD, it satisfies both the ‘nice’ and the ‘retaliating’ criteria.

* Proportional Force. Aim for the least amount of violence to be done: “Avoid rather than check, check rather than harm…”. In IPD terms, this meets the ‘nice’ and ‘retaliating’ criteria and, in a certain sense, the ‘forgiving’ one as well.
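One way to make the IPD comparison concrete is a toy match. The Python sketch below uses my own assumed mapping, not a standard one: Pacifism as Always Cooperate, the ZAP as Grim Trigger (retaliates and never forgives), and Proportional Force as Tit-for-Tat (retaliates, then forgives). Payoffs are the usual Axelrod values (3/3 for mutual cooperation, 5/0 for exploitation, 1/1 for mutual defection), and `defect_once` is a hypothetical opponent that attacks once and then stops.

```python
"""Toy Iterated Prisoner's Dilemma comparing three ethical rules-of-thumb.

Assumed mapping (illustrative only):
  Pacifism           -> always_cooperate
  Zero-Aggression    -> grim_trigger  (nice, retaliating, never forgives)
  Proportional Force -> tit_for_tat   (nice, retaliating, forgiving)
"""
from typing import Callable, List, Tuple

Move = str  # "C" = cooperate, "D" = defect
Strategy = Callable[[List[Move], List[Move]], Move]

# Axelrod-style payoffs: (my score, their score) for (my move, their move).
PAYOFFS = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def always_cooperate(mine: List[Move], theirs: List[Move]) -> Move:
    return "C"


def grim_trigger(mine: List[Move], theirs: List[Move]) -> Move:
    # Cooperate until the opponent defects once, then defect forever.
    return "D" if "D" in theirs else "C"


def tit_for_tat(mine: List[Move], theirs: List[Move]) -> Move:
    # Cooperate first, then copy the opponent's previous move.
    return theirs[-1] if theirs else "C"


def always_defect(mine: List[Move], theirs: List[Move]) -> Move:
    return "D"


def defect_once(mine: List[Move], theirs: List[Move]) -> Move:
    # Hypothetical aggressor: attacks on the first round only, then cooperates.
    return "D" if not mine else "C"


def play(a: Strategy, b: Strategy, rounds: int = 10) -> Tuple[int, int]:
    """Play `rounds` rounds and return the (a, b) total scores."""
    hist_a: List[Move] = []
    hist_b: List[Move] = []
    score_a = score_b = 0
    for _ in range(rounds):
        move_a = a(hist_a, hist_b)
        move_b = b(hist_b, hist_a)
        pa, pb = PAYOFFS[(move_a, move_b)]
        score_a += pa
        score_b += pb
        hist_a.append(move_a)
        hist_b.append(move_b)
    return score_a, score_b


if __name__ == "__main__":
    for name, strat in [("Pacifism", always_cooperate),
                        ("ZAP", grim_trigger),
                        ("Proportional", tit_for_tat)]:
        print(name, "vs always_defect:", play(strat, always_defect),
              "vs defect_once:", play(strat, defect_once))
```

Over 10 rounds, Pacifism scores (0, 50) against `always_defect`, while both retaliating strategies hold it to (9, 14). Against `defect_once`, Tit-for-Tat returns to mutual cooperation and ends level at (29, 29), while Grim Trigger keeps defecting for a lopsided (45, 5). A single toy match like this says little about which rule works better in general.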

I’m hoping to learn of rules-of-thumb that are at least as useful as the ZAP. I know and respect certain people who base their own ethics on the ZAP but reject the idea of proportional force, and I’m hoping to learn of additional alternatives so that I have a better idea of the range of available options.

Any suggestions?

Does something like this seem to you like a reasonable rule of thumb for handling scope insensitivity to low probabilities?

There’s roughly a 30-to-35-in-a-million chance that you will die on any given day. So if I’m dealing with a probability of one in a million, I ‘should’ spend 30 times as much time preparing for my imminent death within the next 24 hours as I do playing with the one-in-a-million shot. If it’s not worth spending 30 seconds preparing for dying within the next day, then I should spend less than one second dealing with that one-in-a-million shot.
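The arithmetic behind this rule can be written as a tiny Python sketch. The 30-per-million daily risk is taken from the lower end of the range above, and the function names are purely illustrative.

```python
# Assumed background risk: 30 per million per day (low end of the 30-35 range).
DAILY_DEATH_RISK = 30e-6


def attention_ratio(p_event: float, daily_risk: float = DAILY_DEATH_RISK) -> float:
    """How many times more likely dying today is than the long-shot event."""
    return daily_risk / p_event


def max_seconds_for_event(p_event: float, seconds_on_death_prep: float,
                          daily_risk: float = DAILY_DEATH_RISK) -> float:
    """Upper bound on time worth spending on the event, by the proportional rule."""
    return seconds_on_death_prep / attention_ratio(p_event, daily_risk)


ratio = attention_ratio(1e-6)               # dying today is ~30x likelier
budget = max_seconds_for_event(1e-6, 30.0)  # 30 s of death-prep -> ~1 s
print(f"ratio = {ratio:.0f}, budget = {budget:.2f} s")
```

Plugging in other probabilities (say, one in ten million) shows how quickly the justified attention shrinks.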

Relatedly, can you think of a way to improve it, such as making it more memorable? Are there any pre-existing references – not just to micromorts, but to comparing them to other probabilities – which I’ve missed?

Superfreighter

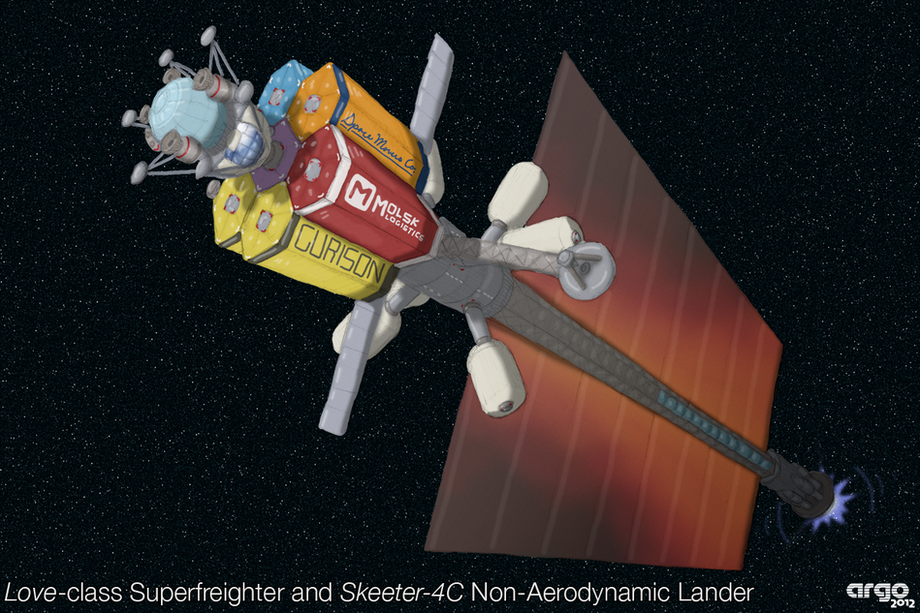

As Dee and her nakama build a colony for themselves in the asteroids, they find a need for a workhorse vessel – and design a class of spaceship which fills all their needs, and then some.

The initial model used for the first trips, the SF-0 “Zip”, had a somewhat different drive configuration than became standard. Once it was rebuilt with the new manufacturing processes available on the V-type asteroids, it was redesignated the SF-1 “Love”. The next ships sharing the design were the SF-2 “Unity”, SF-3 “Imagination”, SF-4 “Napier”, SF-5 “Ludolph”, and SF-6 “Euler”. Most official markings are English written in the Unifon script.

Crew: 8

Dry Mass: 135 tonnes

Typical fuel mass: 240 tonnes

Rated cargo mass: 440 tonnes

Main thruster: antiproton-catalyzed n-⁶Li microfission
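As a rough check on what the spec figures above imply, here is a minimal Python sketch applying the Tsiolkovsky rocket equation to them. The drive’s exhaust velocity isn’t given, so results are expressed as multiples of it. I’m also assuming that the whole typical fuel mass is reaction mass expelled by the drive; that is my assumption, not something stated in the specs.

```python
import math

# Figures from the spec list, in tonnes.
DRY = 135.0
FUEL = 240.0   # assumed to be entirely expelled reaction mass
CARGO = 440.0


def delta_v_over_ve(wet: float, dry: float) -> float:
    """Tsiolkovsky rocket equation: delta-v / v_e = ln(m0 / mf)."""
    return math.log(wet / dry)


# Burning all the typical fuel, with and without the rated cargo aboard.
loaded = delta_v_over_ve(DRY + FUEL + CARGO, DRY + CARGO)
empty = delta_v_over_ve(DRY + FUEL, DRY)
print(f"loaded: {loaded:.3f} v_e, empty: {empty:.3f} v_e")
```

Under these assumptions, a fully laden burn yields about 0.35 times the exhaust velocity, and an unladen one about 1.02 times.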

From left to right: Non-aerodynamic lander; containerized cargo; small heat radiators for hab section; main comm antenna; a pair of counter-rotating sections, each with three inflatable habitat modules; the main boom and radiators, with tanks for water-propellant inside the boom; and the main drive.

Artwork by http://

Further details: http://www.datapacrat.com/

Given how much you have learned of the techniques of rationality, of Bayesian updates and standards of evidence, of curiosity being the first virtue and being willing to update your beliefs… have any of your dreams been affected by them?

The reason I ask: I’m reading the entirety of the Sequences, and am about an eighth of the way through. And I’ve just woken from a dream whose plot was somewhat unusual. I had noticed some mildly strange animals and/or people, and upon trying to find out what was going on, discovered a small riverside camp of people who fell well outside what I understood to be the realm of human variation. The person I had started investigating with then claimed to be a god, or if I preferred, a vastly powerful and intelligent alien entity, and offered to do something to prove it to me. I remembered that I had once established for myself a standard of evidence for exactly this sort of question – the growth of a new, perfectly functional limb, in a way outside of present medical understanding… and in a few moments, my dream-self was the possessor of a nice, long tail. I had not been expecting that to happen, noticed I was extremely confused, and deliberately raised my estimate of the probability that I really was talking to a god-like figure by some number of decibans. At the end of the dream, said deity-figure said that he would offer to split us off from his ‘main project’, on a few conditions – one of which was ‘no more clues’, since he had given us ‘more than enough to figure out what’s going on’… whereupon I questioned a few things, and immediately woke up.

I don’t recall ever having a dream of that sort before, and I dream in understandable narrative plots so often that I sometimes dream sequels. So I’m curious: is this a normal sort of thing that happens to LessWrongians?

You may or may not already be aware, but there exists a boardgame, Phil Eklund’s “High Frontier”, which is a reasonably realistic simulation of using rockets to putter around the solar system and start industrializing it. The BoardGameGeek reviews are at http://boardgamegeek.com/boardgame/47055/high-frontier .

What you may not have heard is that Mr. Eklund is currently designing an expansion to the original game, in which the goal is to launch STL (slower-than-light) interstellar colonization attempts… and the theme has a distinctly transhumanist flavour, with the current draft of some of the crew-types being “Immortal group mind”, “Zero-gee pantropists”, “Utility fog robonauts”, and a “Rental body guild”. Mr. Eklund has asked me to help with the flavourtext for those cards and, if I can find any, with images for the crew cards that are better than the current ones and can be used without copyright issues. And since some of the best transhumanist-themed art I’ve found is here on DA… would any artists here be willing to have their work become part of a published board game?

This is one of those sleep-deprived middle-of-the-night ideas which I’m reasonably likely to regret posting in the morning once I really wake up – but which, at least at the moment, thinking on my more-corrupted-than-standard hardware, seems like a cool idea.

Most role-playing games have a system for determining whether or not certain actions succeed. Most of the time, this can be described as setting a target number and rolling one or more dice, with various modifiers: e.g., you might have to roll a 13 or higher on a twenty-sided die to correctly answer the sphinx’s riddle, and having your handy Book of Ancient Puzzles to refer to may give you a +3 bonus to your die-roll.

How insane and awful an idea would it be to have an RPG system whose core mechanic wasn’t based on linear probabilities like that… but, instead, on decibels of Bayesian probability? For example, instead of a bonus adding a straight +3 to a d20 roll, raising your chance of success by 15 percentage points no matter how easy the task or how skilled you are, the bonus would add +3 decibels: changing your odds from 50% to 66% if you started out with a middling chance, but only from 90% to 95% if you’re already very skilled.
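Here is a minimal Python sketch of the proposed mechanic. It assumes that decibels are measured on log-odds (10 × log10 of the odds), which is what the 50% to 66% and 90% to 95% figures imply. `resolve` is a hypothetical core-mechanic function: skill and bonuses are summed in decibels, converted back into a probability, and checked against one random roll.

```python
import math
import random


def add_decibels(p: float, db: float) -> float:
    """Shift probability p by `db` decibels of log-odds."""
    odds = p / (1.0 - p)
    new_odds = odds * 10 ** (db / 10.0)
    return new_odds / (1.0 + new_odds)


def to_decibels(p: float) -> float:
    """Express a probability as log-odds in decibels (50% -> 0 dB)."""
    return 10.0 * math.log10(p / (1.0 - p))


def resolve(skill_db: float, bonus_db: float, rng: random.Random) -> bool:
    """Hypothetical core mechanic: skill and bonuses add in decibels."""
    total_db = skill_db + bonus_db
    p_success = 1.0 / (1.0 + 10 ** (-total_db / 10.0))
    return rng.random() < p_success


for base in (0.5, 0.9):
    print(f"{base:.0%} + 3 dB -> {add_decibels(base, 3):.1%}")
```

This prints 66.6% and 94.7%, matching the rounded figures in the text. Because every modifier is a plain addition in decibels, players could still do all their bookkeeping with integers, and only the final lookup from decibels to a chance of success would need a table.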

(And now, back to sleep, and to see how much karma I’ve lost come the morning…)

This mentions some of the limitations of eyewitness testimony; does anybody here have any references giving any hard numbers about how reliable eyewitness accounts are, under any given circumstances?

I’d like to be more conscious about my Bayesian-type updates of my beliefs based on general accounts of what people say. So far, I’ve started using a rule-of-thumb that somebody telling me something is so is worth approximately 1 decibel of belief (about 1/3 of a bit): evidence, but about the weakest evidence possible, cancelled out by any opposing account, and outweighed by any more substantive evidence.
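The 1-decibel rule of thumb can be sketched in Python by treating each report as ±1 dB of log-odds evidence. The function names and the `other_evidence_db` parameter are illustrative, not part of any established method.

```python
import math

TESTIMONY_DB = 1.0  # rule of thumb: one report is worth about 1 dB


def probability_from_db(db: float) -> float:
    """Convert log-odds in decibels back to a probability."""
    return 1.0 / (1.0 + 10 ** (-db / 10.0))


def update(prior: float, reports_for: int, reports_against: int,
           other_evidence_db: float = 0.0) -> float:
    """Add 1 dB per supporting report, subtract 1 dB per opposing one,
    then add any stronger evidence expressed in decibels."""
    prior_db = 10.0 * math.log10(prior / (1.0 - prior))
    total_db = (prior_db
                + TESTIMONY_DB * (reports_for - reports_against)
                + other_evidence_db)
    return probability_from_db(total_db)


# 1 dB in bits: 0.1 ban / log10(2), roughly a third of a bit.
print(f"1 dB = {TESTIMONY_DB / (10 * math.log10(2)):.3f} bits")
print(f"{update(0.5, 1, 0):.3f}")  # one report nudges 50% to about 55.7%
print(f"{update(0.5, 1, 1):.3f}")  # an opposing report cancels it: 0.500
```

Because everything is additive in decibels, a single piece of 10 dB evidence swamps any plausible number of one-off reports, which matches the "weakest evidence possible" framing.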

If possible, I’d like to know exactly how reliable such testimony tends to be in one particular set of circumstances – time since the thing being reported, level of emotional involvement, etc – to use as a baseline, and at least roughly how strongly such factors change that. (I’ll actually be very surprised if this particular set of data currently exists in ready form – but I’ll be satisfied if I can get even order-of-magnitude approximations, so that I know whether or not the rules-of-thumb I end up using are at least within plausible spitting distance.)

One of the standard methods of science-fiction world-building is to take a current trend and extrapolate it into the future, and see what comes out. One trend I’ve observed is that over the last century or so, people have kept coming up with clever new ways to find answers to important questions – that is, developing new methods of rationality.

So, given what we currently know about the overall shape of such methods, from Gödel’s incompleteness theorems to Kolmogorov complexity to the various ways around Prisoner’s Dilemmas: at least in a general science-fictional world-building sense, what might we be able to guess or say about what rationalists will be like in, oh, 50 to 100 years?

I currently ‘have’ a minor SF setting, for which there exist a few short stories, comics, pictures, and the like, created by a variety of artists and authors. I’d like future contributions to be as rationalist as possible, with as many characters within the setting as possible being Rationalist!Heroes, or even Rationalist!Villains. Do you have any advice that might help me nudge the various amateur artists and authors involved in that direction?

(In case you’re curious, the setting’s current reftext is here. The basic underlying question I’m basing it around is, “As technology advances, it becomes possible for smaller and smaller groups to kill more and more people. How can society survive, let alone develop, when individuals have the power to kill billions?” So far, most of the creations have been developing the overall background and characters, rather than focusing on that question directly.)